Running a wavelet computation on a \(CH_4\) molecule¶

The purpose of this lesson is to get familiar with the basic variables

needed to run a wavelet computation in isolated boundary conditions. At

the end of the lesson, you can run a wavelet run, check the amount of

needed memory and understand the important part of the output. We

propose to use python in a Jupyter notebook to simplify the use of the

bigdft executable and to have tools to combine pre-processing,

execution, post-processing and analysis.

Introduction: Running the code¶

As already explained in the N2 molecule

tutorial.bigdft uses dictionaries (a

collection of pairs of key and value) for the input and output which are

serialized by means of the yaml format. This allows us to manipulate

dictionaries instead of files and define simple actions on them for the

basic code operations, instead of manually modifying the input keys.

We here introduce the BigDFT.Inputfiles.Inpufile class, which is a

generalisation of a python dictionary and that has as class methods the

same methods that are available in the BigDFT.InputActions module.

In [1]:

#the dictionary below:

inp = { 'dft': { 'hgrids': 0.55, 'rmult': [3.5, 9.0]} }

# becomes

from BigDFT import Inputfiles as I

inp=I.Inputfile()

inp.set_hgrid(0.55)

inp.set_rmult([3.5,9.0])

Beside a default input file called input.yaml, BigDFT requires the atomic positions for the studied system and optionaly the pseudo-potential files. For the following tutorial, a methane molecule will be used. The position file is a simple XYZ file named CH4_posinp.xyz:

5 angstroemd0 # methane molecule free C 0 0 0 H -0.63169789 -0.63169789 -0.63169789 H +0.63169789 +0.63169789 -0.63169789 H +0.63169789 -0.63169789 +0.63169789 H -0.63169789 +0.63169789 +0.63169789

We can copy this file into the default posinp file posinp.xyz as

cp CH4_posinp.xyzz posinp.xyz or indicate it in the input

dictionary:

In [2]:

inp.set_atomic_positions('CH4_posinp.xyz')

The pseudo-potential files are following the ABINIT structure and are of GTH or HGH types (see the pseudo-potential file page on the ABINIT website for several LDA and GGA files and the page on the CP2K server for HGH pseudo for several functionals). The following files may be used for this tutorial: **psppar.C** and **psppar.H**.

Warning, to run properly, the pseudo-potential files must be psppar.XX where XX is the symbol used in the position file. The other files can have user-defined names, as explained in Tutorial-N2.ipynb lesson. If the code has been compiled with MPI capabilities (which is enabled by default), running BigDFT on several cores is as easy as run it as a serial job. There is no need to change anything in the input files.

Running BigDFT is done using the bigdft executable in a standard

Unix way. In this notebook, we use the SystemCalculator class:

In [3]:

from BigDFT import Calculators as calc

reload(calc)

study = calc.SystemCalculator() #Create a calculator

ch4 = study.run(input=inp)

Initialize a Calculator with OMP_NUM_THREADS=2 and command /local/binaries/gfortran-fpe-1.8/install/bin/bigdft

Creating the yaml input file "./input.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft

ch4 is the instance of the class Logfile which can handle easily all information coming from the output file log.yaml. Then we can display some information as:

In [4]:

print ch4

- Atom types:

- C

- H

- Cell: Free BC

- Convergence criterion on forces: 0.0

- Max val of Forces: 0.0214711014196

- Energy: -8.025214366429553

- Symmetry group: disabled

- fermi_level: -0.3414840466884

- Number of Atoms: 5

- Convergence criterion on Wfn. Residue: 0.0001

- No. of KS orbitals:

- 4

The wavelet basis set, a convergence study¶

Daubechies Wavelets is a systematic basis set (as plane waves are),

which means than one can increase arbitrarily the accuracy of the

results by varying some parameters which are defined in the dft

dictionary (inp['dft']). We here explain what are the meaning and

the typical values for the principal parameters, hgrid and

rmult.

CH4-grid

``hgrids`` are used to set up the basis set. In free boundary

conditions, the basis set is characterised by a spatial expansion and a

grid step, as shown in the side figure. There is ‘’one float value’’

describing the ‘’grid steps’’ in the three space directions (‘’i.e.’’ x,

y and z) or a 3D array is also accepted. These values are in bohr unit

and typically range from 0.3 to 0.65. The harder the pseudo-potential,

the lower value should be set up. These values are called hgrids in

the input dictionary, and can be set by the set_hgrid method of the

Inpufile class.

``rmult`` contains an array of two float values that are two

multiplying factors. They multiply quantities that are chemical species

dependent. The first factor is the most important since it describes

‘’the spatial expansion’’ of the basis set (in yellow on the figure

beside). Indeed the basis set is defined as a set of real space points

with non-zero values. These points are on a global regular mesh and

located inside spheres centered on atoms. The first multiplying factor

is called crmult for Coarse grid Radius MULTiplier. Increasing it

means that further spatial expansion is possible for the wavefunctions.

Typical values are 5 to 7. The second one called frmult for Fine

grid Radius MULTiplier is related to the fine resolution. This parameter

is less pertinent for the convergence of energy and can be ignored. It

is possible to indicate only one float value, the crmult parameter.

Such parameters can be set by the method set_rmult of Inputfile

class.

Exercise¶

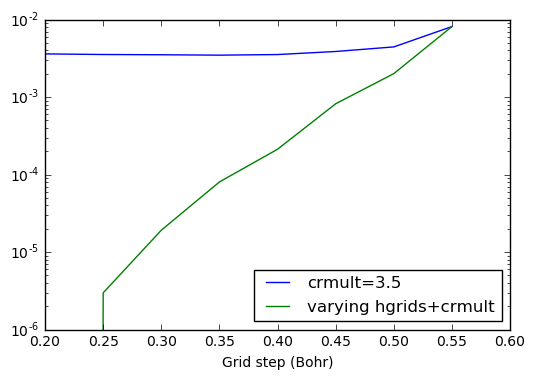

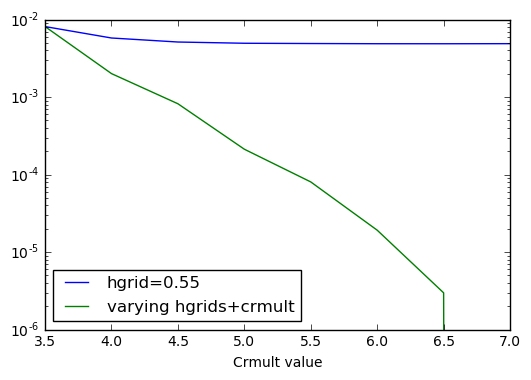

Run BigDFT for the following values of hgrid and crmult and plot the

total energy convergence versus hgrids. The final total energy can

be retrieved using th emethod energy from the result of each of the

runs. The unit of the energies is in Hartree. This tutorial also

explains how to use the Dataset class.

hgrids = 0.55bohr / crmult = 3.5 hgrids = 0.50bohr / crmult = 4.0 hgrids = 0.45bohr / crmult = 4.5 hgrids = 0.40bohr / crmult = 5.0 hgrids = 0.35bohr / crmult = 5.5 hgrids = 0.30bohr / crmult = 6.0 hgrids = 0.25bohr / crmult = 6.5 hgrids = 0.20bohr / crmult = 7.0

This precision plot shows the systematicity of the wavelet basis set: by improving the basis set, we improve the absolute value of the total energy.

Indeed, there are two kind of errors arising from the basis set. The

first one is due to the fact the basis set can’t account for quickly

varying wavefunctions (value of hgrids should be decreased). The

second error is the fact that the wavefunctions are constrained to stay

inside the defined basis set (output values are zero). In the last case

crmult should be raised.

Construction of the input dataset dictionaries¶

Let us build three different dataset of inputfiles, the first two

varying hgrid and crmult and the last by varying the two

toghether. We also label each of the input dictionaries by a unique name

identifying the run.

In [ ]:

from BigDFT import Datasets as D

h_and_c_dataset=D.Dataset('h_and_c')

for h,c in zip(hgrids,crmult):

inp_run=copy.deepcopy(inp)

inp_run['dft']['hgrids'] = h

inp_run['dft']['rmult'] = [c,9.0] #only change crmult

label_run='h='+str(h)+'c='+str(c)

h_and_c_dataset.append({'name': label_run,'input': inp_run})

In [20]:

import copy

hgrids = [ 0.55 - i*0.05 for i in range(8)]

crmult = [ 3.5 + i*0.5 for i in range(8)]

In [6]:

h_only_dataset=[]

for h in hgrids:

inp_run=copy.deepcopy(inp)

inp_run['dft']['hgrids'] = h

label_run='h='+str(h)

h_only_dataset.append({'name': label_run,'input': inp_run})

In [7]:

c_only_dataset=[]

for c in crmult:

inp_run=copy.deepcopy(inp)

inp_run['dft']['rmult'] = [c,9.0] #only change crmult

label_run='c='+str(c)

c_only_dataset.append({'name': label_run,'input': inp_run})

In [8]:

h_and_c_dataset=[]

for h,c in zip(hgrids,crmult):

inp_run=copy.deepcopy(inp)

inp_run['dft']['hgrids'] = h

inp_run['dft']['rmult'] = [c,9.0] #only change crmult

label_run='h='+str(h)+'c='+str(c)

h_and_c_dataset.append({'name': label_run,'input': inp_run})

We then define a function that runs the calculation written in the

dataset and store their result in a corresponding dictionary key

log:

In [9]:

def run_dataset(dataset):

for configuration in dataset:

if 'log' not in configuration:

configuration['log']=study.run(name=configuration['name'],input=configuration['input'])

#the same command could have been written as

#configuration['log']=study.run(**configuration)

We then run the three datasets:

In [10]:

run_dataset(h_only_dataset)

run_dataset(c_only_dataset)

run_dataset(h_and_c_dataset)

Creating the yaml input file "h=0.55.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.55

Creating the yaml input file "h=0.5.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.5

Creating the yaml input file "h=0.45.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.45

Creating the yaml input file "h=0.4.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.4

Creating the yaml input file "h=0.35.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.35

Creating the yaml input file "h=0.3.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.3

Creating the yaml input file "h=0.25.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.25

Creating the yaml input file "h=0.2.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.2

Creating the yaml input file "c=3.5.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n c=3.5

Creating the yaml input file "c=4.0.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n c=4.0

Creating the yaml input file "c=4.5.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n c=4.5

Creating the yaml input file "c=5.0.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n c=5.0

Creating the yaml input file "c=5.5.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n c=5.5

Creating the yaml input file "c=6.0.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n c=6.0

Creating the yaml input file "c=6.5.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n c=6.5

Creating the yaml input file "c=7.0.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n c=7.0

Creating the yaml input file "h=0.55c=3.5.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.55c=3.5

Creating the yaml input file "h=0.5c=4.0.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.5c=4.0

Creating the yaml input file "h=0.45c=4.5.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.45c=4.5

Creating the yaml input file "h=0.4c=5.0.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.4c=5.0

Creating the yaml input file "h=0.35c=5.5.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.35c=5.5

Creating the yaml input file "h=0.3c=6.0.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.3c=6.0

Creating the yaml input file "h=0.25c=6.5.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.25c=6.5

Creating the yaml input file "h=0.2c=7.0.yaml"

Executing command: /local/binaries/gfortran-fpe-1.8/install/bin/bigdft -n h=0.2c=7.0

We now store the energies of each of the dataset runs, and identify the

minimum as the minimum value from the h_and_c dataset:

In [14]:

import matplotlib.pyplot as plt

import numpy as np

energies_h=np.array([c['log'].energy for c in h_only_dataset])

energies_c=np.array([c['log'].energy for c in c_only_dataset])

energies_hc=np.array([c['log'].energy for c in h_and_c_dataset])

#find the minimum

emin=np.min(energies_hc)

We plot the energy values varying the grid spacing or the extension

In [21]:

%matplotlib inline

plt.xlabel('Grid step (Bohr)')

plt.plot(hgrids,energies_h-emin,label='crmult=3.5')

plt.plot(hgrids,energies_hc-emin,label='varying hgrids+crmult')

plt.yscale('log')

plt.legend(loc='best')

Out[21]:

<matplotlib.legend.Legend at 0x7f4c812f6b90>

In [22]:

plt.xlabel('Crmult value')

plt.plot(crmult,energies_c-emin,label='hgrid=0.55')

plt.plot(crmult,energies_hc-emin,label='varying hgrids+crmult')

plt.yscale('log')

plt.legend(loc='best')

Out[22]:

<matplotlib.legend.Legend at 0x7f4c812e0910>

Considerations¶

We see that both the parameters hgrids and rmult have to be

decreased and increased (respectively) in order to achieve convergence.

Increasing only one of the two parameter will eventually lead to

saturation of the absolute error on the energy.

In [ ]:

import matplotlib.pyplot as plt

import numpy as np

HtoeV = 27.21138386 #Conversion Hartree to meV

emin = HtoeV*results[-1].energy

ener = [ HtoeV*l.energy - emin for l in results ]

ener2 = [ HtoeV*l.energy - emin for l in res2 ]

plt.figure(figsize=(15,7))

# Plot with matplotlib

plt.plot(Hgrids, ener, marker='o', ls='-', color='#ff0000', label='varying hgrids+crmult')

plt.plot(Hgrids, ener2, marker='s', ls='-', color='#888888', label='crmult=3.5')

plt.yscale('log')

plt.xlim(0.55,0.25)

plt.xlabel('Grid step (Bohr)')

plt.ylabel('Total energy precision (eV)')

plt.title('Total energy convergence versus the grid step for the CH$_4$ molecule')

plt.legend(loc=3)

plt.show()

In [ ]:

Fine tuning of the basis set¶

The multi-scale property of the wavelets is used in BigDFT and a two

level grid is used for the calculation. We’ve seen previously the coarse

grid definition using the the multiplying factor rmult. The second

multiplying value on this line of the input file is used for the fine

grid and is called frmult. Like crmult, it defines a factor for

the radii used to define the fine grid region where the number of

degrees of freedom is indeed eight times the one of the coarse grid. It

allows to define region near the atoms where the wavefunctions are

allowed to vary more quickly. Typical values for this factor are 8 to

10. It’s worth to note that even if the value of the multiplier is

greater than crmult it defines a smaller region due to the fact that

the units which are associated to these radii are significantly

different.

The physical quantities used by crmult and frmult can be changed

in the pseudo-potential by adding an additional line with two values in

bohr. The two values that the code is using (either computed or read

from the pseudo-potential files) are output in the following way in the

screen output:

- Symbol : C #Type No. 01

No. of Electrons : 4

No. of Atoms : 1

Radii of active regions (AU):

Coarse : 1.58437

Fine : 0.30452

Coarse PSP : 1.30510

Source : Hard-Coded

- Symbol : H #Type No. 02

No. of Electrons : 1

No. of Atoms : 4

Radii of active regions (AU):

Coarse : 1.46342

Fine : 0.20000

Coarse PSP : 0.00000

Source : Hard-CodedAnalysing the output¶

The output of BigDFT is divided into four parts: * Input values are printed out, including a summary of the different input files (DFT calculation parameters, atom positions, pseudo-potential values…); * Input wavefunction creation, usually called “input guess”; * The SCF (Self-Consistent Field) loop itself; * The post SCF calculations including the forces calculation and other possible treatment like a finite size effect estimation or a virtual states determination.

The system parameters output¶

All the read values from the different input files are printed out at the program startup. Some additional values are provided there also, like the memory consumption. Values are given for one process, which corresponds to one core in an MPI environment.

In [ ]:

import yaml

print yaml.dump(ch4.memory,default_flow_style=False)

print 'Estimated Memory Peak',ch4.memory_peak,'MB'

The overall memory requirement needed for this calculation is thus: 39

MB (Estimated Memory Peak) which is provided by thememory_peak

attribute.

In this example, the memory requirement is given for one process run and the peak of memory will be in the initialisation during the Kernel calculation, while the SCF loop will reach 36MB during the Poisson solver calculation. For bigger systems, with more orbitals, the peak of memory is usually reached during the Hamiltonian application.

Exercise¶

Run a script to estimate the memory requirement of a run before

submitting it to the queue system of a super-computer using the

dry_run option.

It reads the same input, and is thus convenient to validate inputs.

Try several values from 1 to 6 and discuss the memory distribution.

In [ ]:

study = calc.SystemCalculator(dry_run=True) #Create a calculator

peak = []

for i in range(1,7):

study.set_global_option('dry_mpi',i)

dry = study.run(input=inp)

peak.append(dry.memory_peak)

print yaml.dump(dry.memory,default_flow_style=False)

for i,p in enumerate(peak):

print "mpi=",i+1,"Estimated memory peak (MB)",p

BigDFT distributes the orbitals over the available processes. The value

All (distributed) orbitals does not decrease anymore after 4

processes since there are only 4 bands in our example). This means that

running a parallel job with more processors than orbitals will result in

a bad speedup. The number of cores involved in the calculation might be

however increased via OMP parallelisation, as it is indicated in

Scalability with MPI and OpenMP

lesson.

The input guess¶

The initial wavefunctions in BigDFT are calculated using the atomic orbitals for all the electrons of the s, p, d shells, obtained from the solution of the PSP self-consistent equation for the isolated atom.

In [ ]:

print yaml.dump(ch4.log['Input Hamiltonian'])

The corresponding hamiltonian is then diagonalised and the n_band

(norb in the code notations) lower eigenfunctions are used to start

the SCF loop. BigDFT outputs the eigenvalues, in the following example,

8 electrons were used in the input guess and the resulting first fourth

eigenfunctions will be used for a four band calculation.

Input Guess Overlap Matrices: {Calculated: true, Diagonalized: true}

Orbitals:

- {e: -0.6493539153869, f: 2.0}

- {e: -0.3625626366055, f: 2.0}

- {e: -0.3624675839372, f: 2.0}

- {e: -0.3624675839372, f: 2.0} -- Last InputGuess eval, H-L IG gap: 20.6959 eV

- {e: 0.3980916655348, f: 0.0} -- First virtual eval

- {e: 0.3983087771728, f: 0.0}

- {e: 0.3983087771728, f: 0.0}

- {e: 0.5993393223683, f: 0.0}

The SCF loop¶

The SCF loop follows a direct minimisation scheme and is made of the

following steps: * Calculate the charge density from the previous

wavefunctions. * Apply the Poisson solver to obtain the Hartree

potential from the charges and calculate the exchange-correlation energy

and the energy of the XC potential thanks to the chosen functional. *

Apply the resulting hamiltonian on the current wavefunctions. *

Precondition the result and apply a steepest descent or a DIIS history

method. This depends on idsx, not specified in the present input. It

is therefore set to the default value, which is 6 (for an history of 6

previous set of vectors. To perform a SD minimisation one should add

“idsx: 0” to the dft dictionary of inp. * Orthogonalise the new

wavefunctions.

Finally the total energy and the square norm of the residue (gnrm) are

printed out. The gnrm value is the stopping criterion. It can be

chosen using gnrm_cv in the dft dictionary. The default value

(1e-4) is used here and a good value can reach 1e-5.

In [ ]:

print 'gnrm_cv by default',ch4.gnrm_cv

The minimisation scheme coupled with DIIS (and thanks to the good preconditioner) is a very efficient way to obtain convergence for systems with a gap, even with a very small one. Usual run should reach the 1e-4 stop criterion within 15 to 25 iterations. Otherwise, there is an issue with the system, either there is no gap, or the input guess is too symmetric due to the LCAO diagonalization, specific spin polarization…

In [ ]:

ch4.wfn_plot()

The post-SCF treatments¶

At the end of the SCF loop, a diagonalisation of the current hamiltonian is done to obtain Kohn-Sham eigenfunctions. The corresponding eigenvalues are also given.

In [ ]:

print ch4.evals

The forces are then calculated.

In [ ]:

print yaml.dump(ch4.forces,default_flow_style=False)

Some other post-SCF may be done depending on the parameters in the dft dictionary of inp.

Exercise¶

Run bigdft when varying the DIIS history length and discuss the

memory consumption.

Reducing the DIIS history is a good way to reduce the memory consumption when one cannot increase the number of processes. Of course this implies more iterations in SCF loops.

Adding a charge¶

BigDFT can treat charged system without the requirement to add a compensating background like in plane waves. The additional charge to add to the system is set in the dft dictionary with the qcharge key. In the following example an electron has been added (-1):

In [ ]:

inp3 = {}

inp3['dft'] = { 'hgrids': 0.55, 'nrepmax': 'accurate' }

inp3['posinp'] = 'CH3-_posinp.xyz'

Exercise¶

Remove the last hydrogen atom in the previous methane example and modify to add an electron. Then run BigDFT for an electronic convergence.

One can notice that the total charge in the system is indeed -8 thanks to the additional charge. The convergence rate is still good for this CH\(_3^-\) radical since it is a closed shell system.

In [ ]:

study = calc.SystemCalculator() #Use a new calculator)

ch3m = study.run(input=inp3)

print ch3m

ch3m.wfn_plot()

Running a geometry optimisation¶

In the previous charged example the geometry of the radical is kept the same than for the methane molecule, while it is likely to change. One can thus optimize the geometry with BigDFT.

To run geometry calculations (molecular dynamics, structure optimisations…) one should add another dictionary geopt in the input which contains the method to use.

In the log file, all input variables are indicated with their default value.

Here, we look for a local minimum so we can use the keyword LBFGS.

We can add also the stopping criteria. There are two stopping criteria:

the first ncount_cluster_x being the number of loops (force

evaluations) and the second forcemax is the maximum on forces. For

isolated systems, the first criterion is well adapted while the second

is good for periodic boundary conditions.

In [ ]:

inpg = {}

inpg['dft'] = { 'hgrids': 0.55, 'nrepmax': 'accurate' }

inpg['posinp'] = 'CH4_posinp.xyz'

inpg['geopt'] = { 'method': 'LBFGS', 'ncount_cluster_x': 20}

study = calc.SystemCalculator() #Use a new calculator)

ch4geopt = study.run(input=inpg)

In [ ]:

%matplotlib inline

ch4geopt.geopt_plot()

Exercise¶

Take the CH\(_3^-\) radical **CH3-_posinp.xyz** file and run a geometry optimisation.

The evolution of the forces during relaxation can be easily obtained

using the geop_plot function to the result of the calculation.

At each iteration, BigDFT outputs a file posout_XXXX.xyz in the directory data with the geometry of the iteration XXX. You can visualize it using v_sim (select all files in the “Browser” tab).